It’s happened to you, I’m sure, as it has happened to me. You go about your life, working on your computer, editing files, processing data. You don’t get up in the morning thinking that today will be the day. The day that you fire up your computer, with all the hopes of getting that paper written, and instead are faced with the Blue Screen of Death. A message blinks “Can not find hard drive” or something equally vague and frightening.

It happened to me one winter evening in

I ran into our friendly computer tech support director, Harold, the other day. It was Harold’s relentless campaign for backing up data that inspired me to burn all my work onto a CD every week. But it makes me wonder about the psychology of backing up one’s data. Even after my experience, I still don’t always back up. Some Fridays I just feel like going home: “I’ll do it tomorrow.” And I suppose one of these tomorrows I will fire up the machine and get that screen of death.

And who will I call? Harold. And often, what can Harold do? Nothing. This is not because Harold isn’t a great IT person; it is because Harold isn’t Zeus and cannot pull off a deus ex machina, coming down to earth and intervening in the ones and zeros that make up the information on the hard drive.

I recently wrote a post about

Electronics tend to follow an inverse power law in terms of their lifespan. Take, say, a hundred iPods. When they leave the factory, all 100 work. A month later, 99 work (someone dropped one in the toilet, for example). A year later 80 of them work, and so on, until some years down the line only 1 is still working. And this is okay, because electronics get replaced with newer gadgets. But it is the probability of failure I am concerned with here: all electronic devices will someday fail. So why, every time we shut down our machine without backing up our data, are we so confident that tomorrow it will in fact start rather than greet us with the blue screen of death?

I think the answer has to do with the rule of succession, the same problem faced by Adam and Eve in the Garden of Eden. When you first buy your computer and turn it on, it is as if your computer gives you a white marble, which you place in a box. Already in the box is a Blue Marble of Death, because you don’t know whether it will ever turn on again; it could be a lemon. Every time you start your computer and it works, your computer gives you another white marble from its box, which you place in yours. Eventually your box will contain a lot of white marbles, representing your confidence in your computer. Counting that first white marble and the blue one as your starting point, after n successful starts your box holds n + 1 white marbles and one blue one, so a marble drawn from it says the next start will work with probability (n + 1)/(n + 2), which creeps ever closer to certainty.
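The marble box is one way of picturing Laplace’s rule of succession: after s successes in n trials, estimate the chance of another success as (s + 1)/(n + 2). The minimal Python sketch below assumes that the first white marble and the Blue Marble of Death are your starting point and that each successful start adds exactly one white marble; the function names `rule_of_succession` and `your_box` are hypothetical, made up for this illustration.

```python
from fractions import Fraction


def rule_of_succession(successes: int, trials: int) -> Fraction:
    """Laplace's rule of succession: (s + 1) / (n + 2)."""
    if not 0 <= successes <= trials:
        raise ValueError("need 0 <= successes <= trials")
    return Fraction(successes + 1, trials + 2)


def your_box(successful_starts: int) -> tuple[int, int]:
    """Hypothetical model of your marble box: one white marble and one
    Blue Marble of Death to begin with, plus one white marble for every
    successful start. Returns (white, blue)."""
    return 1 + successful_starts, 1


# Drawing a marble from your box gives the same number as the rule of
# succession with no failures recorded.
for n in (0, 1, 10, 77):
    white, blue = your_box(n)
    confidence = Fraction(white, white + blue)
    assert confidence == rule_of_succession(n, n)
    print(n, confidence)  # 0 1/2, then 1 2/3, 10 11/12, 77 78/79
```

Because the blue marble never leaves your box, your confidence approaches 1 but never quite reaches it.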

But your computer doesn’t see it this way. Your computer is doing something different. It starts off with a lot of white marbles in its box and one Blue Marble of Death. Every time you turn it on, it draws a marble from the box and doesn’t replace it; if the marble is white, it gives it to you, boosting your confidence. So it may begin with a thousand white marbles and one Blue Marble of Death. The next time you turn it on, it draws a marble from its box, and with high probability (1000 in 1001) it is white. But with every successive draw, the chance that the blue marble comes out next keeps rising (1 in 1001 on the first start, 1 in 1000 on the next, then 1 in 999, and so on), until the day when, suddenly and unexpectedly, you turn on the computer with very high confidence that it will work, and the computer draws the blue marble.
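The computer’s side of the story is sampling without replacement. With W white marbles and one blue marble, if the first k − 1 starts all drew white, then W + 2 − k marbles remain, so the chance that start k draws the blue marble is 1/(W + 2 − k). A minimal Python sketch under that assumption follows; W = 1000 comes from the example above, while the names `hazard` and `crash_start` are hypothetical.

```python
import random
from fractions import Fraction
from typing import Optional


def hazard(start: int, white: int = 1000) -> Fraction:
    """Chance that start number `start` (1-indexed) draws the Blue Marble
    of Death, given that every earlier start drew a white marble. Drawn
    marbles are never put back."""
    if not 1 <= start <= white + 1:
        raise ValueError("start must be between 1 and white + 1")
    remaining = white + 2 - start  # marbles left in the computer's box
    return Fraction(1, remaining)


def crash_start(white: int = 1000, rng: Optional[random.Random] = None) -> int:
    """Simulate the computer's box: shuffling it fixes the order in which
    marbles will be drawn without replacement. Returns the (1-indexed)
    start on which the blue marble comes out."""
    if rng is None:
        rng = random.Random()
    box = ["white"] * white + ["blue"]
    rng.shuffle(box)
    return box.index("blue") + 1


print(hazard(1), hazard(500), hazard(1001))  # 1/1001 1/502 1
print(crash_start(rng=random.Random(2024)))  # a start number from 1 to 1001
```

Shuffling once and reading off the blue marble’s position is equivalent to drawing one marble at a time without replacement. It also shows that, in this model, the crash is equally likely to land on any of the 1001 starts, even though the risk on each successive start keeps climbing.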

The result? You’re screwed.

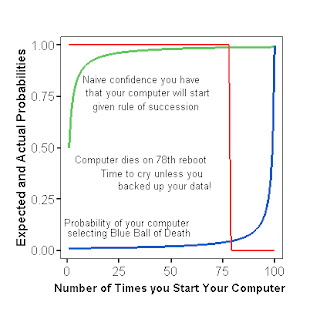

The figure above shows two curves: the expected probability, by the rule of succession, that your computer will start given a certain number of prior starts, and the probability that your computer will draw the Blue Marble of Death, sampling without replacement, on any given start-up. And then we encounter the real world. This hypothetical computer drew the white marble of hope all the way up to reboot 77. Then, on reboot 78, it drew the Blue Marble of Death, and CRASH! There went your work (unless you backed it up).
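The two curves can also be compared directly. After k − 1 successful starts, the rule of succession puts your confidence in start k at k/(k + 1), while the computer, drawing without replacement from W white marbles and one blue, actually succeeds with probability (W + 1 − k)/(W + 2 − k). Setting the two equal gives k(W + 2 − k) = (k + 1)(W + 1 − k), which simplifies to k = (W + 1)/2, so in this model your confidence overtakes the machine’s real odds about halfway through its life. The Python sketch below assumes W = 100, since the figure’s actual marble count isn’t stated; the function names are hypothetical.

```python
from fractions import Fraction


def your_confidence(start: int) -> Fraction:
    """Rule of succession after start - 1 successful starts (no failures):
    (s + 1) / (s + 2) with s = start - 1."""
    return Fraction(start, start + 1)


def machine_survival(start: int, white: int) -> Fraction:
    """Chance that start number `start` works, given all earlier starts did,
    when the computer draws without replacement from `white` white marbles
    and one Blue Marble of Death."""
    remaining = white + 2 - start  # marbles left before this draw
    return Fraction(remaining - 1, remaining)


def crossover(white: int) -> int:
    """First start at which your confidence exceeds the machine's real odds."""
    start = 1
    while your_confidence(start) <= machine_survival(start, white):
        start += 1
    return start


W = 100  # hypothetical marble count; the figure's value is not given
for start in (1, 25, 51, 78, 100):
    print(f"start {start:3d}: you {float(your_confidence(start)):.3f}, "
          f"machine {float(machine_survival(start, W)):.3f}")
print(crossover(W))  # 51
```

With the assumed W = 100, reboot 78 sits well past the crossover: you would put the odds of a clean start at 78/79 (about 98.7%), while the machine’s actual odds are 23/24 (about 95.8%).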

Do we reason this way? People are bad at assessing the probability of success and failure, which is why so many people gamble despite the odds. Hope springs eternal in the Garden of Eden, in

Your choice.